“Team disquantified” has entered workplace conversations as a shorthand for shifting how teams are measured: away from single-minded, numerical KPIs and toward a mix of quantitative signals and structured human judgment.

That phrase can sound academic or slangy, but the underlying idea is practical and urgent. Organizations that rely solely on raw metrics often see unintended side effects — gaming, short-termism, burnout, and blind spots around creativity and collaboration. Conversely, entirely qualitative approaches risk inconsistency and bias.

This article explains what the concept means in practice, why it matters (through the lens of Expertise, Experience, Authoritativeness, and Trustworthiness), and how to design a repeatable, governed framework that preserves accountability while capturing the human, contextual signals numbers miss.

You’ll get clear definitions, benefits and risks, a six-step implementation roadmap, tools and templates that work, an applied example for product teams, plus five concise “People also ask” FAQs to answer common leader questions.

1. What “disquantified” really means (practical definitions)

At its clearest, “disquantified” is shorthand for reducing the dominance of raw numbers in performance evaluation and decision-making — not abandoning data, but balancing it with structured qualitative inputs. There are three practical variants you’ll encounter:

- Human-centered hybridization: Keep a small set of core metrics, but pair each with regular qualitative context (stories, rubrics, peer narratives). Metrics inform; narratives explain.

- Contextual measurement: Translate behaviors and outcomes into richer, contextual metrics (for example, a quality score plus an explanation) so numbers carry meaning rather than dictating behavior.

- Rejection of KPI tyranny: Purposefully lower the weight of rigid KPIs that encourage gaming or short-term optimization, shifting evaluation toward learning, craftsmanship, and team health.

The useful common ground is simple: design assessment systems that use numbers to highlight signals and use narrative to interpret them. The worst misstep is swapping math for vagueness; the goal is structured human judgment alongside selective metrics.

2. Why this matters: an E-E-A-T-informed rationale

A balanced framework must satisfy more than convenience; it must be credible. Viewed through an E-E-A-T lens (Expertise, Experience, Authoritativeness, Trustworthiness):

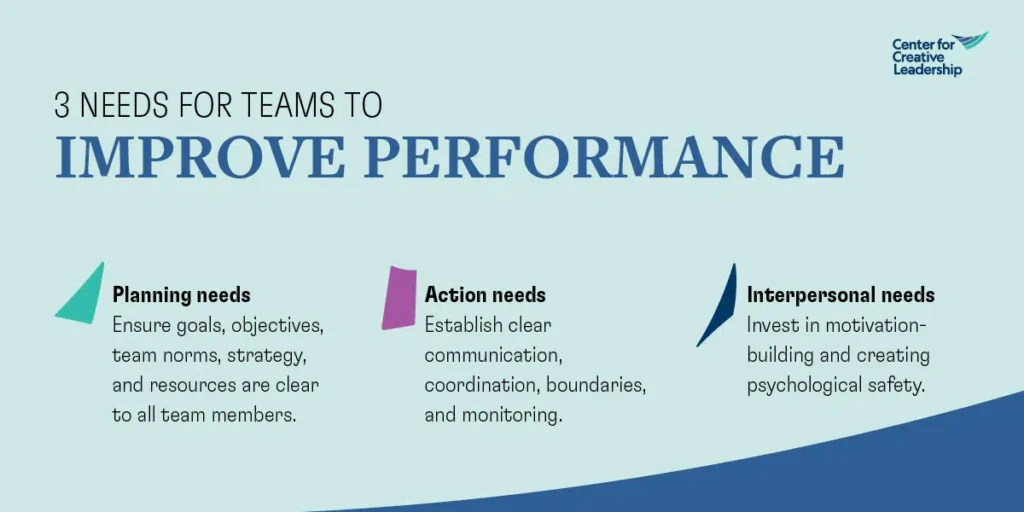

- Expertise: Research and practitioner experience show that many valuable outcomes — psychological safety, collaboration, innovation — are poorly captured by straightforward metrics. Experts recommend combining short, meaningful quantitative signals with qualitative evaluation to surface these outcomes reliably.

- Experience: Practitioners who’ve piloted hybrid systems report higher team engagement, better long-term outcomes, and fewer perverse incentives. Structured qualitative inputs (rubrics, anchored scales, narratives) are repeatedly highlighted as the parts that make subjectivity usable.

- Authoritativeness: Leaders who transparently document methods, rubrics, and governance earn stakeholder trust more quickly than those who merely declare “we’re moving away from metrics.” Authority comes from repeatability: published criteria, training for reviewers, and documented pilot results.

- Trustworthiness: Hybrid systems that publish aggregate themes (not raw comments) and that have clear escalation rules for underperformance earn trust. Trust also requires measurement of the measurement system itself — inter-rater reliability, bias audits, and continuous improvement.

In short, the E-E-A-T frame makes “disquantified” defensible and operational rather than trendy.

3. Benefits — what you gain when you do it right

When a team moves from metric-dominant to balanced evaluation, these benefits commonly follow:

- Richer decision-making: Numbers flag anomalies; narratives explain causes and trade-offs. Leaders make better, more nuanced decisions.

- Reduced gaming: With qualitative context and a small set of balanced metrics, teams have less incentive to optimize for a single number.

- Healthier teams: When evaluation recognizes collaboration, learning, and psychological safety, intrinsic motivation improves and turnover often decreases.

- Stronger long-term outcomes: Innovation and customer-focused improvements that take time get recognized rather than penalized for not moving a short-run metric.

- Greater adaptability: Hybrid systems capture experiment learnings and tacit knowledge that raw metrics miss.

These gains aren’t automatic — they require design, training, and governance to avoid bias and vagueness.

4. Risks — what to avoid and how to mitigate

The shift introduces real risks unless proactively managed:

- Subjectivity and bias: Without structure, qualitative reviews devolve into opaque opinions. Mitigate with rubrics, anchored examples, reviewer calibration, and rotating reviewers.

- Loss of comparability: If you remove metrics wholesale, it becomes hard to compare performance across teams or time. Keep a small core of comparable metrics for baselining.

- Executive pushback: Stakeholders who expect hard numbers will resist. Mitigate by offering pilots, clear success criteria, and a plan to show measurable improvements in business outcomes.

- Complexity creep: Combining many qualitative inputs with many metrics creates noise. Keep the framework deliberately lightweight and focused on 3–6 core outcomes.

- Signal dilution: Too many signals dilute attention; counter by pairing each key outcome with one concise quantitative indicator and one structured qualitative input.

Anticipate these risks up front; your governance plan is the safety net.

5. A six-step roadmap to implement a balanced framework

Use this pragmatic roadmap to move from idea to pilot:

- Clarify the problem and the “why.” Document which metric behaviors you want to reduce and which human outcomes you want to surface (e.g., innovation, quality, team health).

- Define 3–6 essential outcomes. Keep outcomes crisp and measurable by proxy (e.g., customer satisfaction, time-to-value, defect severity, team learning).

- Choose hybrid indicators. For each outcome, pair a single quantitative signal with a structured qualitative input. Example: “Customer satisfaction” = NPS trend (quant) + three customer story summaries (qual).

- Design rubrics and anchors. Create behaviorally anchored scales for qualitative inputs with concrete examples for each level. Train reviewers on these anchors.

- Set governance and transparency rules. Define how signals trigger actions (coaching, resource review, performance management), what will be published (aggregate themes, rubrics), and who evaluates edge cases.

- Pilot and measure the measurement. Run a time-boxed pilot (one quarter), compare against control groups, and collect meta-metrics: inter-rater agreement, bias signals, and stakeholder satisfaction.

Iterate rapidly: the framework should evolve, not be handed down as a finished doctrine.
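The pairing rule in steps 2 and 3 can be written down as a small data structure. The following is a minimal sketch, not a prescribed tool: the class name `HybridIndicator` and the function `validate_framework` are hypothetical, and the checks only encode what the roadmap states (3–6 outcomes, each with one quantitative signal and one qualitative input).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridIndicator:
    """One outcome paired with one quantitative signal and one qualitative input."""
    outcome: str
    quantitative_signal: str
    qualitative_input: str


def validate_framework(indicators: list[HybridIndicator]) -> list[str]:
    """Return a list of problems; an empty list means these checks pass."""
    problems = []
    # The roadmap recommends 3-6 essential outcomes.
    if not 3 <= len(indicators) <= 6:
        problems.append(f"expected 3-6 outcomes, got {len(indicators)}")
    # Each outcome should appear once so it has exactly one pairing.
    names = [i.outcome for i in indicators]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        problems.append(f"duplicate outcomes: {', '.join(duplicates)}")
    # Neither half of the pairing may be left blank.
    for i in indicators:
        if not i.quantitative_signal.strip() or not i.qualitative_input.strip():
            problems.append(f"'{i.outcome}' is missing a signal or an input")
    return problems


# Illustrative framework using the pairings named in this article.
framework = [
    HybridIndicator("Customer satisfaction", "NPS trend", "three customer story summaries"),
    HybridIndicator("Product quality", "production defect rate", "post-deployment review note"),
    HybridIndicator("Team learning", "number of executed experiments", "experiment journal summaries"),
]
print(validate_framework(framework))  # prints []
```

Keeping the definition this small makes complexity creep visible: adding a seventh outcome or a second metric per outcome has to be a deliberate change rather than a drift.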

6. Tools and practical methods that work

You don’t need exotic tech to do this well. Common, effective methods include:

- Pulse surveys + thematic analysis: Short, frequent pulses that emphasize free-text themes and trend analysis rather than raw scores.

- Rubric-based peer reviews: Structured templates with behavior examples that reduce variance and bias.

- Outcome journals / experiment logs: Teams record major decisions, hypotheses, experiments, and learnings; leaders review these for context.

- Small selective dashboards: No more than five core metrics, each linked to a short contextual note explaining recent shifts.

- Calibration sessions: Quarterly sessions where reviewers align on rubric application using real anonymous examples.

- Meta-measurement: Regular audits of rater consistency, demographic bias, and reliability scores.

Adoption is cultural as much as technical; invest in training and storytelling.
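One common way to quantify rater consistency for the meta-measurement audit is Cohen's kappa, which corrects raw agreement between two reviewers for the agreement expected by chance. The article does not name a specific statistic, so treat this as one reasonable option; the function name and the sample ratings below are made up for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who scored the same items with categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's label frequencies, summed over labels.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical rubric scores from two reviewers on the same six peer reviews.
reviewer_1 = ["excellent", "satisfactory", "satisfactory",
              "needs improvement", "excellent", "satisfactory"]
reviewer_2 = ["excellent", "satisfactory", "needs improvement",
              "needs improvement", "excellent", "excellent"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # prints 0.52
```

A kappa near 1 means reviewers apply the rubric almost identically; a value near 0 means their agreement is no better than chance, which is a signal to revisit the anchors in a calibration session.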

7. Example: Applying the framework in a product team (sample plan)

Scenario: A product organization is suffering from “feature velocity” fixation. Engineers push features fast to hit velocity KPIs, but customer satisfaction and product stability suffer.

Plan:

- Outcomes: Customer satisfaction, product quality, team learning.

- Hybrid indicators:

  - Customer satisfaction = NPS trend (quant) + monthly customer story highlight (qual).

  - Product quality = production defect rate (quant) + bi-weekly post-deployment review note (qual).

  - Team learning = number of executed experiments (quant) + experiment journal summaries (qual).

- Rubrics: Define what “excellent,” “satisfactory,” and “needs improvement” look like for experiment design, code quality, and incident response.

- Governance: If the qualitative signals for product quality indicate systemic issues, trigger a resource review; if quantitative signals breach thresholds, trigger operational remediation.

- Pilot: Run with two squads for a quarter and compare NPS, defect counts, and team engagement against control squads.

This concrete pairing ensures leaders see numbers and understand the story behind them.
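The governance rule in this plan can be expressed as explicit decision logic, which makes it easier to publish and audit. The sketch below is illustrative only: the `SquadSignals` fields, the function name, and the threshold value are assumptions, and a real threshold would come from the team's own baseline.

```python
from dataclasses import dataclass


@dataclass
class SquadSignals:
    squad: str
    defect_rate: float             # quantitative: production defects per release
    systemic_issue_flagged: bool   # qualitative: reviewers' rubric-based reading of review notes


# Hypothetical threshold; not a recommended value.
DEFECT_RATE_THRESHOLD = 2.0


def governance_actions(signals: SquadSignals,
                       defect_rate_threshold: float = DEFECT_RATE_THRESHOLD) -> list[str]:
    """Map one squad's signals to the actions named in the governance rule."""
    actions = []
    # Qualitative signal pointing to systemic issues -> resource review.
    if signals.systemic_issue_flagged:
        actions.append("resource review")
    # Quantitative signal breaching its threshold -> operational remediation.
    if signals.defect_rate > defect_rate_threshold:
        actions.append("operational remediation")
    return actions


print(governance_actions(SquadSignals("squad-a", 3.5, False)))  # prints ['operational remediation']
print(governance_actions(SquadSignals("squad-b", 1.0, True)))   # prints ['resource review']
```

Both rules are evaluated independently, so a squad with a breached threshold and a flagged systemic issue receives both actions rather than only the first match.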

8. How to communicate the change internally and externally

Language matters. Avoid jargon-heavy phrases that can confuse stakeholders. Use simple, concrete terms like “balanced metrics and narrative review” or “hybrid performance framework.” When presenting:

- Show the problem with current metrics and evidence (anecdotes + data).

- Describe the outcomes you’ll protect and the precise indicators you’ll use.

- Present a short pilot plan with defined decision rules and success metrics.

- Commit to transparency about rubrics and governance while protecting individual privacy.

- Offer a timeline and a way for stakeholders to escalate concerns.

Clarity and early wins are your best allies when driving cultural change.

FAQs

1. How do I implement a disquantified approach without losing accountability?

Pair each core outcome with one quantitative indicator and one structured qualitative input, define explicit governance rules for action, and publish rubrics so decisions are traceable.

2. What qualitative inputs work best?

Behaviorally anchored peer reviews, pulse survey themes, customer story summaries, experiment journals, and short retrospective note templates work well when they are structured and reviewers are trained to use them.

3. Won’t removing metrics hurt performance reviews?

Not if you replace raw metrics with consistent qualitative evidence plus a few targeted numbers. Train raters, use rubrics, and keep at least one comparative metric for fairness.

4. How do I convince executives who want hard numbers?

Propose a time-boxed pilot with clear success criteria, show how hybrid signals reduce perverse incentives, and prepare to report both business outcomes and meta-metrics about evaluation reliability.

5. Is “disquantified” the right term to use in official documents?

Prefer clearer phrasing like “hybrid performance framework” or “balanced metrics and narrative review” to avoid confusion and maintain credibility.


Conclusion

Moving “beyond the number” is less an ideological rejection of data and more a pragmatic correction: numbers alone distort what matters most in team performance. A well-designed hybrid framework preserves the clarity and comparability numbers provide while surfacing context, learning, and human signals that metrics miss.

The secret is structure — concise outcomes, one quantitative signal paired with one qualitative input, rubrics to tame subjectivity, transparent governance, and a disciplined pilot that measures both business outcomes and the reliability of the new system. Done correctly, this approach reduces gaming, improves long-term decision-making, and increases team wellbeing while keeping accountability intact.

Avoid jargon, document everything, and iterate rapidly based on evidence. With that approach, leaders get the best of both worlds: actionable data and the human judgment necessary to interpret it wisely.